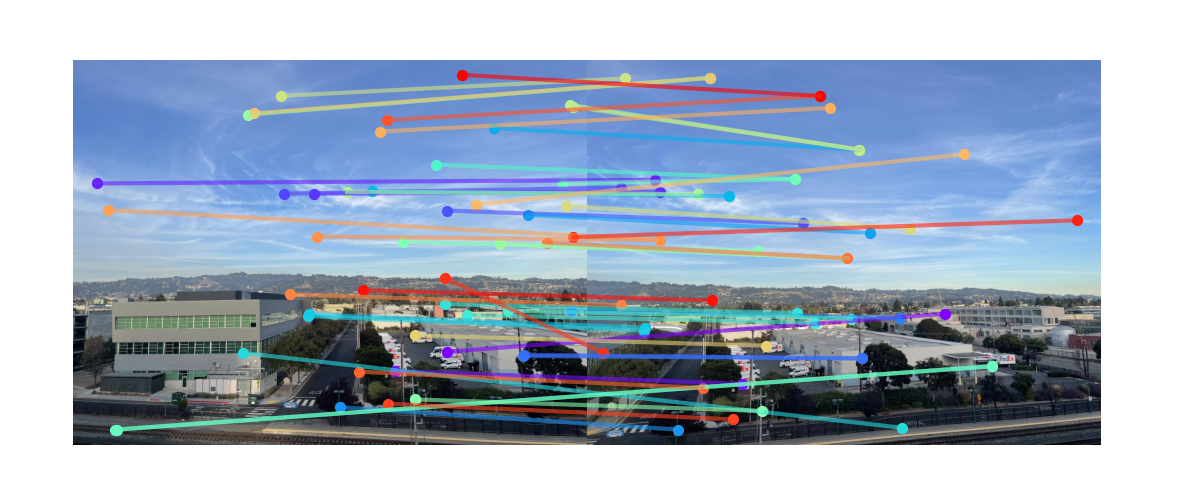

Another fun thing homography can achieve is image stiching. When two pictures are taken from the same center of projection, we can use homography to transform the first image to the space of second image.

To align the images, we first calculate the shift between image1 undergoes after homography transformation. Then, we apply the same shift to image2 to align the images. Note that the alignment is not perfect, because we

calculated our homography transformation using least square, but this error is minor and not noticeable in stiching.

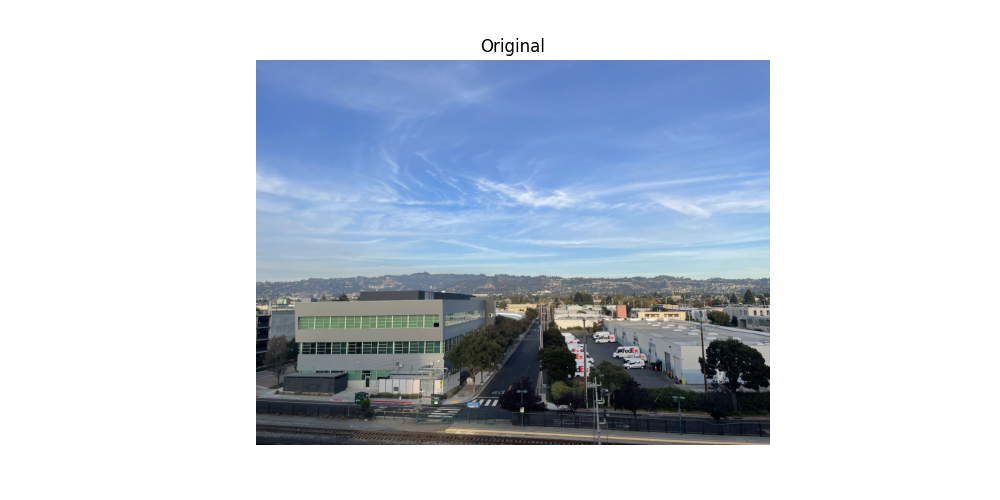

Simply aligning the image is not enough for a good stiching. We also need a way to smooth out the region where the images overlap. If we naively take the average of the two images, we can see a very clear edge at the overlap region.

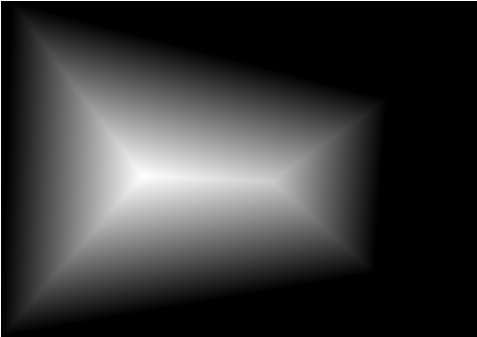

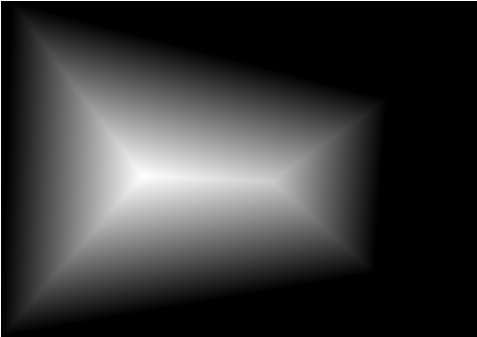

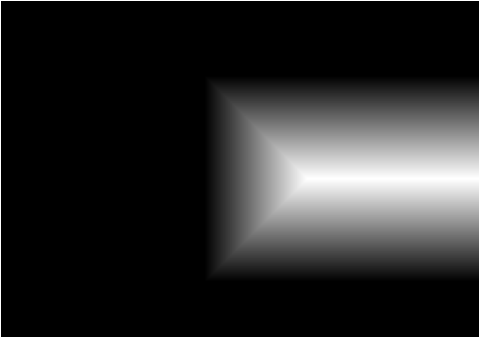

To fix this, I will be using two band blending, where I first calculate the L2 distance transform of the alpha masks (which pixels are used in the final result), I then use the normalized distance transform of two pictures to perform blending.

Specifically, I first find the high frequency and low frequency components of the two images. For high frequency, the pixel where it's distance transform value is greater will be taken between the two images. For low frequency, the distance transform serves as weights

for a weighted average of the two images.

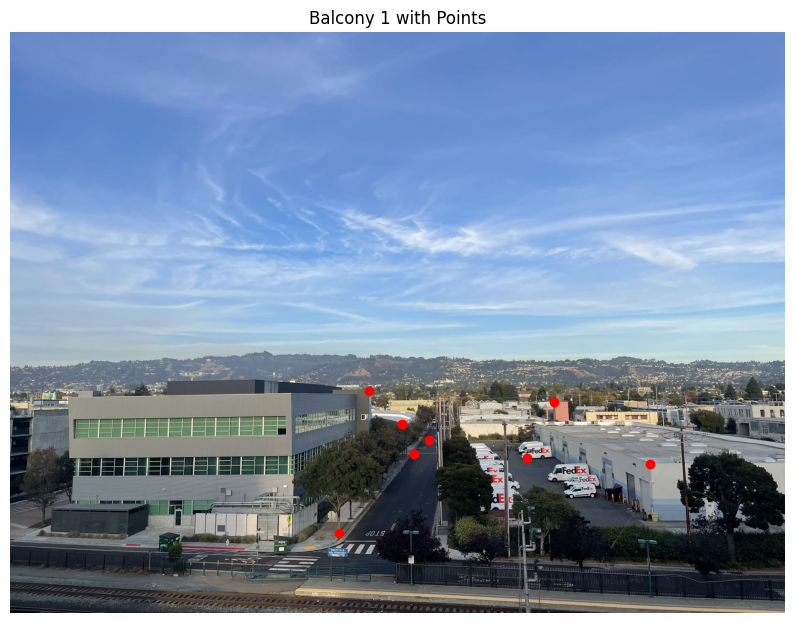

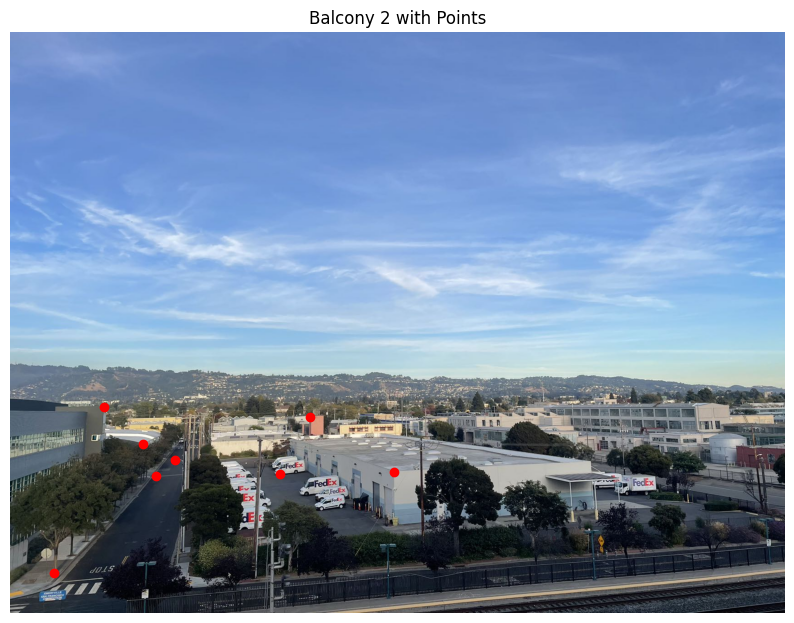

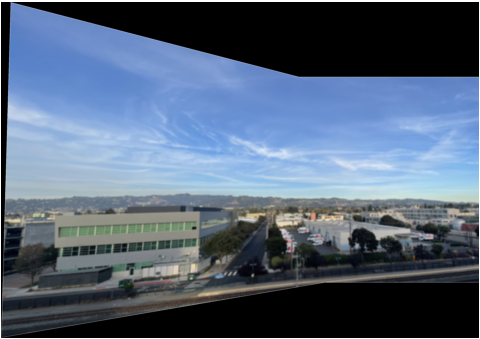

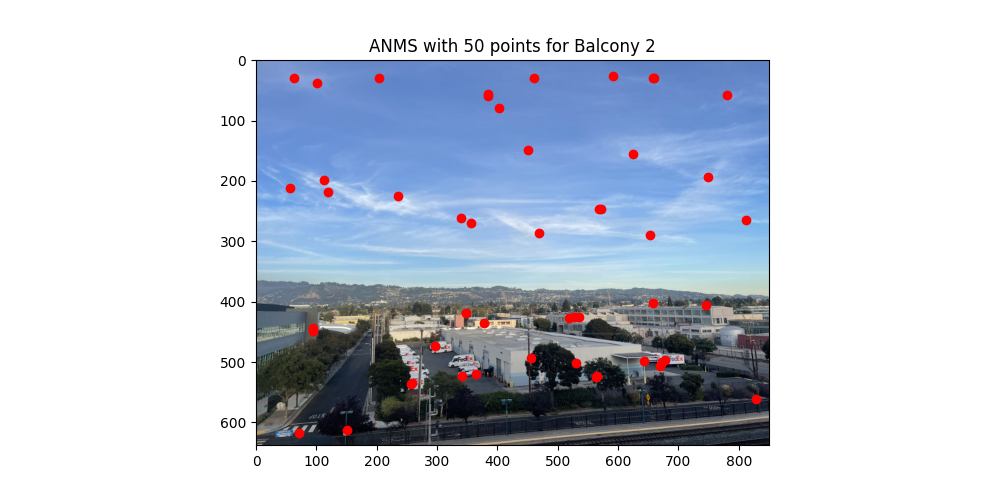

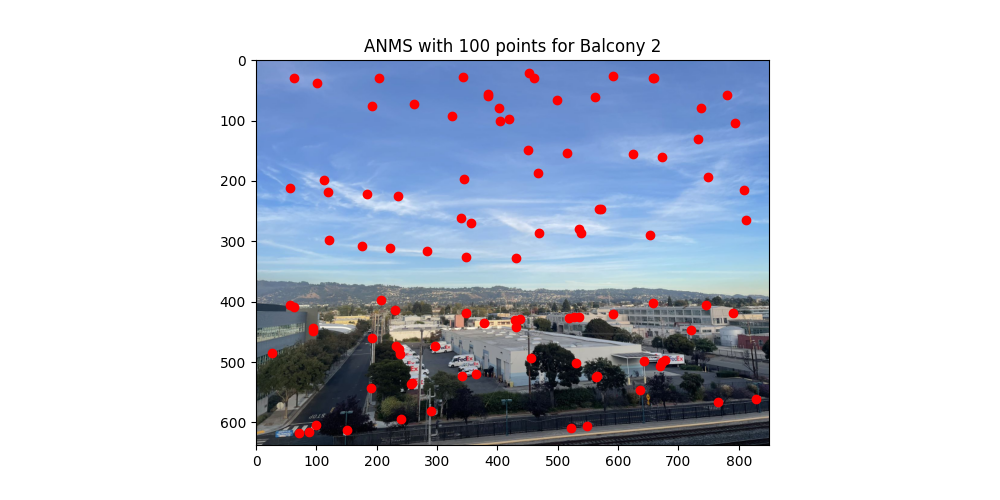

balcony left image

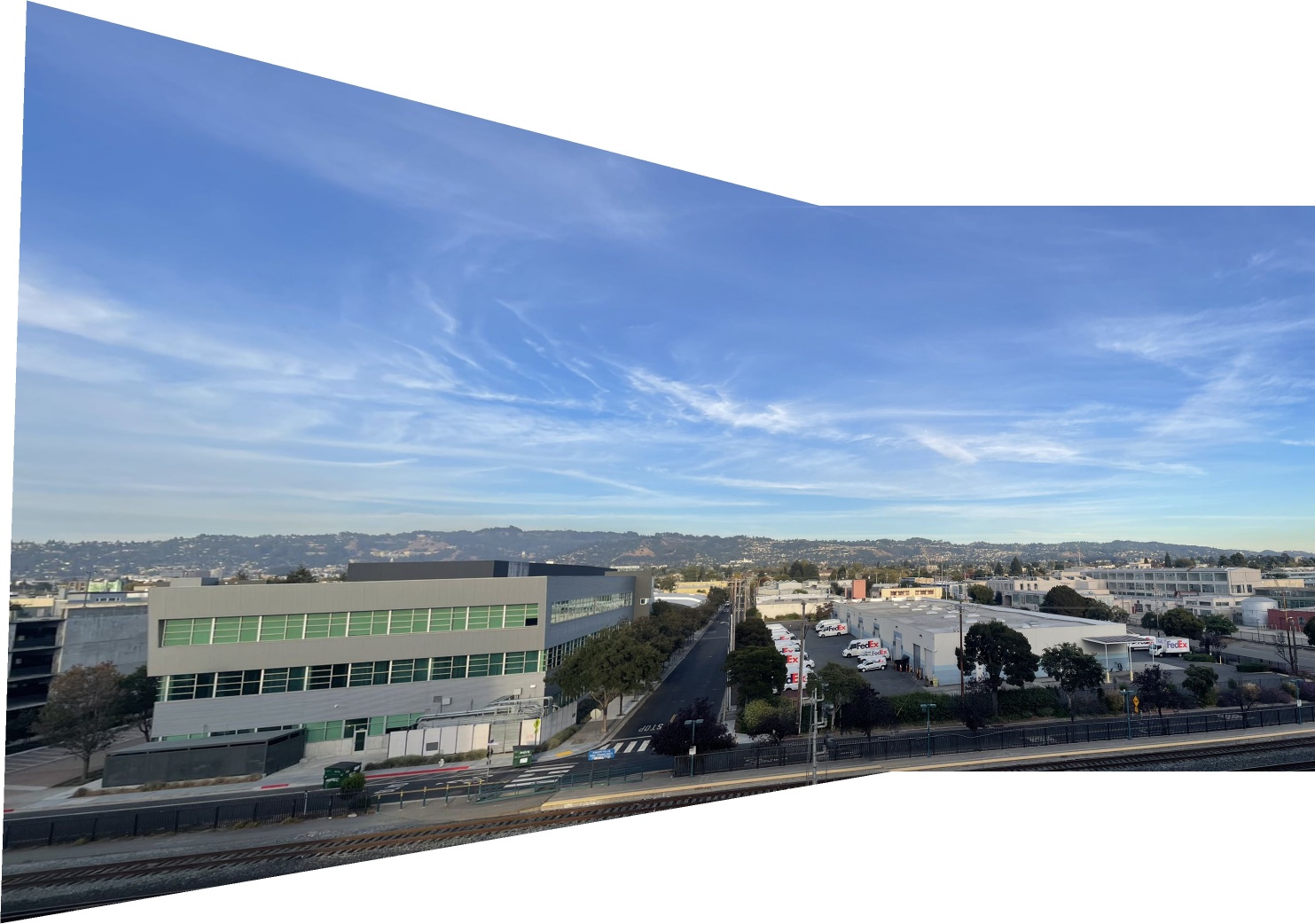

balcony right image

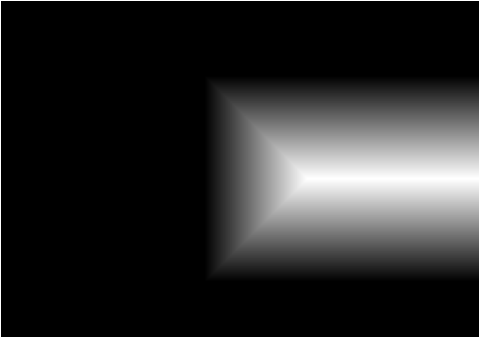

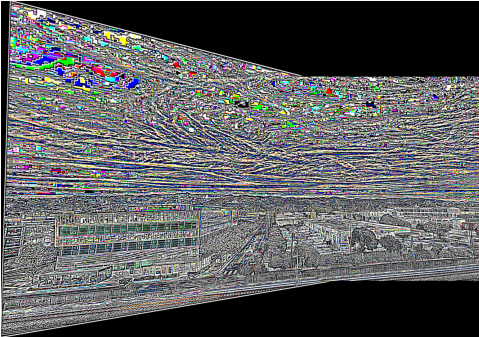

L2 distance transform of the alpha mask of the balcony left image.

L2 distance transform of the alpha mask of the balcony right image.

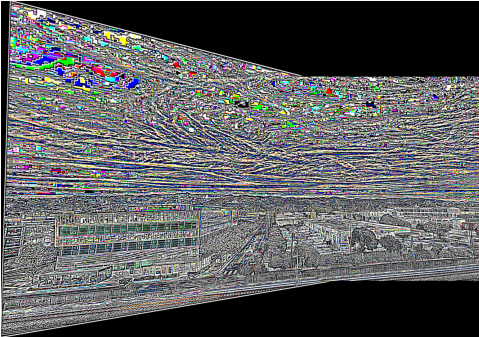

low band blending

high band blending

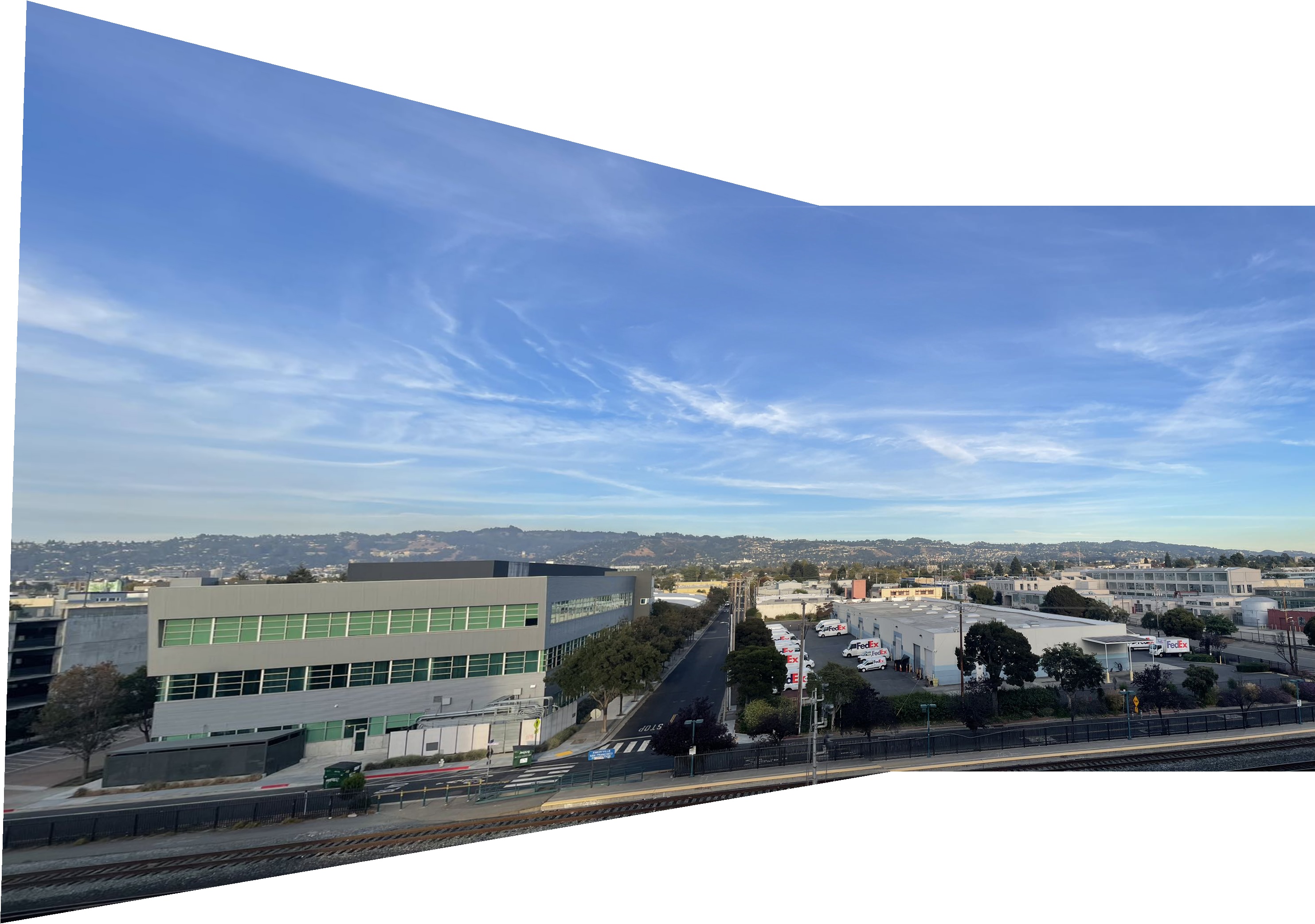

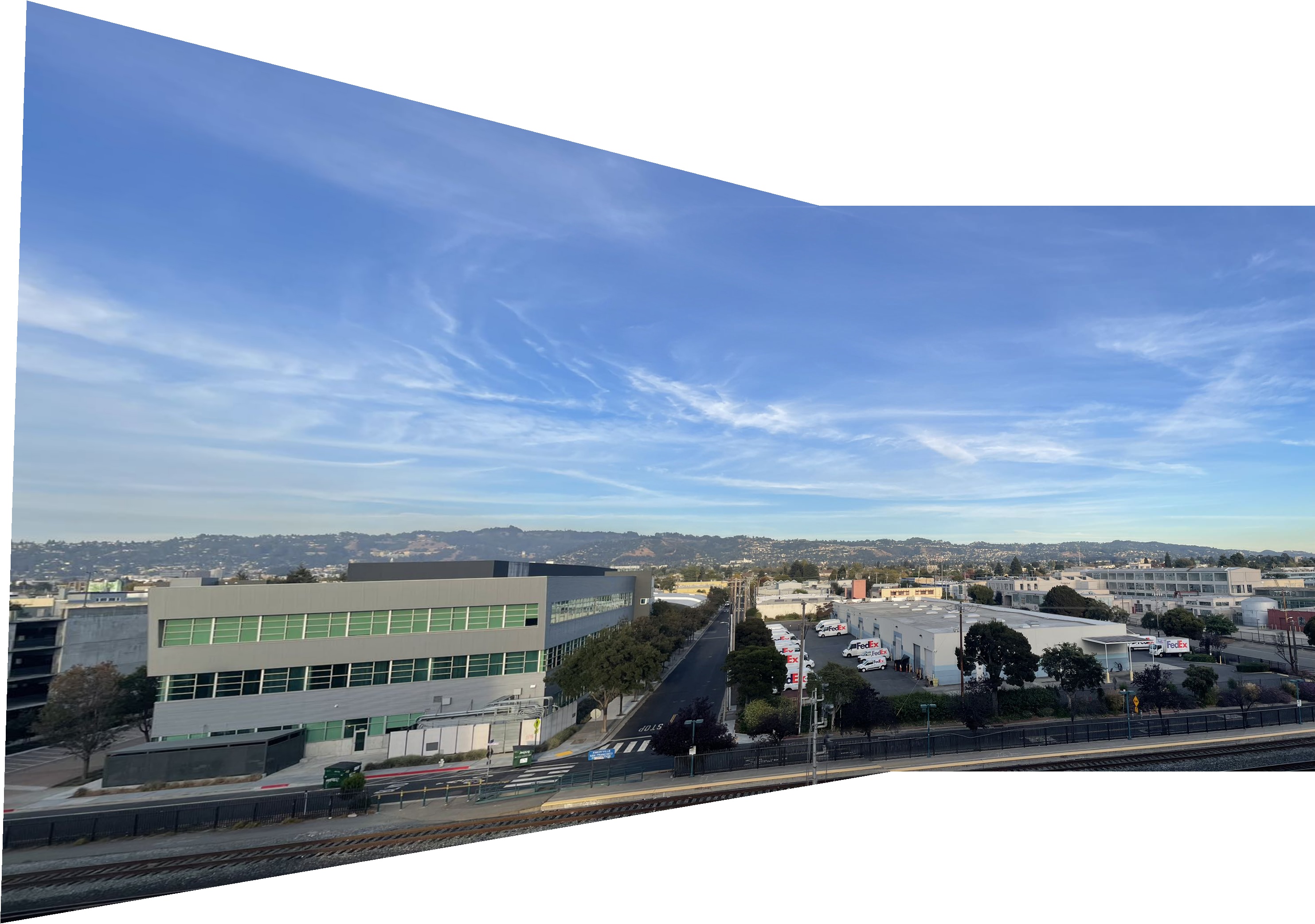

Final stitched balcony image

Here are a few more examples, the distance transform and 2 band results are not shown for simplicity of the webpage.

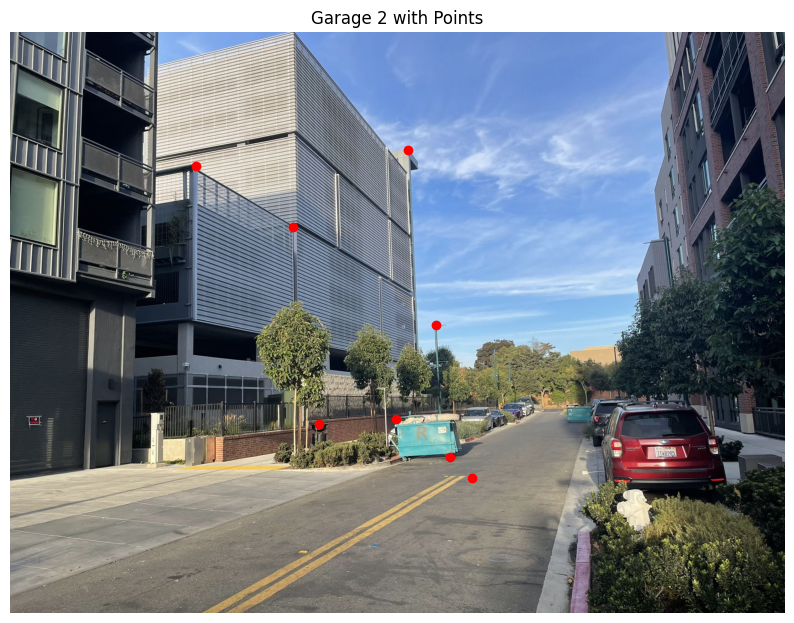

garage left image

garage right image

Final stitched garage image